Implementing Ethical AI Systems: Dual-Factor Agency as a Marker of Manipulation or Disempowerment in AI Strategy Implementation

How do we cultivate workplaces where ethics meet efficiency?

How do we guide behavior without slipping into manipulation? Can we clearly distinguish honest persuasion from deceptive control?

Could not all communication simply be regarded as a means of manipulation? Is all interaction inherently manipulative?

Certainly not. Not every exchange, nor every influence, is manipulative.

To untangle this complexity, we need a structured yet deeply human-centric lens.

Interaction, communication, and even intentional persuasion are not inherently manipulative, but the stakes are high, especially when it comes to AI implementation and development strategies.

The recent MIT study "Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task" investigated the neural and behavioral effects of using large language model (LLM) assistants for essay writing.

The study divided 54 participants into three groups: LLM, Search Engine, and Brain-only. The LLM-only group exhibited significantly weaker brain activity and the lowest sense of ownership over their essays. Additionally, over a four-month period, the LLM-only group consistently underperformed on cognitive-behavioral measures compared with the Brain-only and Search Engine groups (Kosmyna et al., 2025).

These results suggest potential long-term educational implications of relying on LLMs. Many commentators cite this study as evidence that AI produces brain rot, cognitive decline, and decreased ownership in the workplace.

But does it? It’s not as simple as it sounds.

AI can also drive increased productivity and capability in the workplace.

AI is being baked into almost every major platform and service package.

AI is here to stay.

How we introduce change or new technology into existing systems is what separates success from failure, and manipulative or coercive systems from human-centric, ethical ones.

This is part one of a three-part series proposing an implementation framework that works for change management in general but is particularly suited to AI implementation and strategy development.

The framework is Agency, Trust, Policy (ATP). In biology, ATP (adenosine triphosphate) is a crucial molecule that acts as the primary energy carrier for cellular processes. It is often called the "energy currency" of the cell because it provides readily available energy for various cellular activities.

This ATP framework is, somewhat cheesily, the “energy” carrier for ethical AI implementation and policy. It energizes the entire system and allows ideas, information, and control to flow appropriately between human and synthetic agents and principals.

Understanding Agency

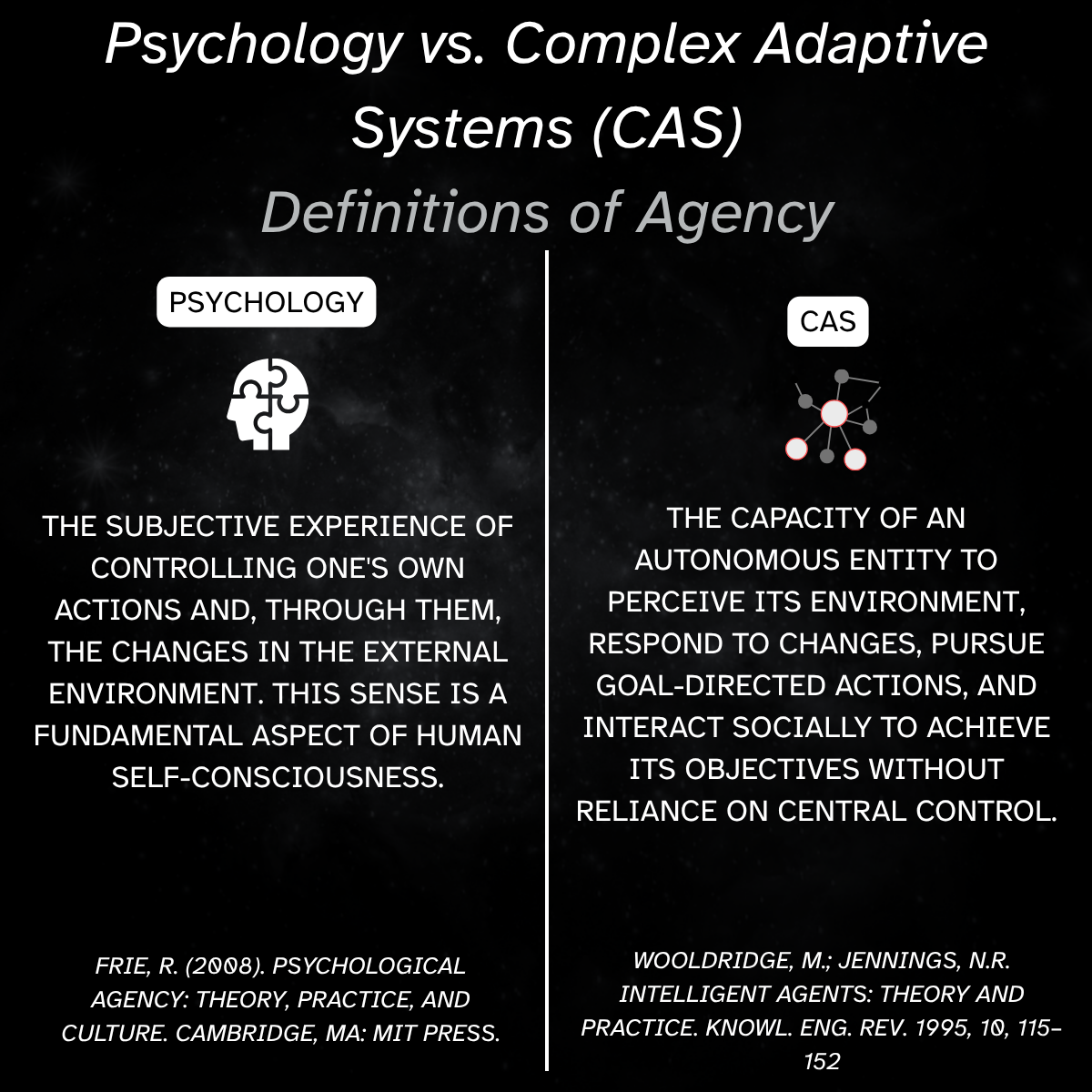

Agency is a multifaceted concept. To build truly ethical AI systems, we must reconcile its two dominant definitions: the complex adaptive systems (CAS) definition and the definition from psychology.

Complex adaptive systems describe agency as the capacity of individual entities (agents) to intentionally exert influence within systems. Here, agency is inherently emergent: smaller agents combine to form larger, more complex systems that direct their collective environment in nonlinear ways.

Agency is discussed in various contexts: in organizations, within cognitive behavioral psychology and neurology, and as a component of complex adaptive systems. Our focus today is the intersection of complex adaptive systems with psychology and neurology. Understanding agency at this convergence is crucial for combating manipulation and for developing systems that are neither manipulative nor coercive.

For example, an organization may be viewed as a single agent exerting control over other agents in a larger macro system, while itself being composed of smaller subsystems and sub-agents with their own spheres of control and agency. The FBI, the CIA, Apple, Microsoft, or your local police department may all be considered agents in this sense. Each can be viewed as acting with intentionality, producing cause-and-effect relationships, and exerting control over certain aspects of the systems in which it operates, whether governance, law enforcement, or the tech sector.

Psychology's definition of agency, by contrast, centers on the subjective experience of being in control of one's actions and thoughts. This differs sharply from complex adaptive systems thinking: it is grounded not in the reality of control but in the perception of control, whether or not that control is actually there.

It still concerns actions, cause and effect, and the voluntary responses of an agent within its system. However, the psychological definition almost always refers to an individual person or, in neurology, sometimes an individual animal.

A comparison of complex adaptive systems and psychology’s view of agency.

Distinguishing Manipulation from Persuasion

Putting these two definitions together gives us a starting framework for distinguishing manipulation from persuasion, and both from mere interaction, within a given system.

Marketing and propaganda, state-level information and manipulation agencies, and even individuals engaged in manipulative behavior such as gaslighting operate on the psychological definition, not the complex adaptive systems definition. Effective manipulation frequently involves increasing a target's subjective sense of perceived agency while reducing or funneling their objective agentic control toward a narrow set of outcomes.

A manipulator increases the target's psychological agency in order to increase their own agentic control.

The opposite can be true as well. Psychological agency can be reduced while agentic control is either unchanged or even increased. This is a frequent coercion tactic of dictatorial regimes: they want resistance to seem futile, so they remove the perception of options and alternatives from the general population. For example, the Soviet Union rolled out unifying propaganda and enforced rituals that made it seem as if the party and the people were of one accord. This encouraged fear of rebellion, because no one knew who was merely performing and who was a true believer.

[THE PEOPLE AND THE PARTY ARE UNITED] Retrieved from: https://upnorth.eu/seven-soviet-era-tips-for-running-a-successful-police-state/

A Combined Definition of Agency

Merging these definitions gives us a more robust definition of agency, a dual-factor model:

Dual-Factor Agency: The capacity of an agent to accurately perceive their control (PA) within a system, coupled with their genuine ability to autonomously direct and influence that system (AC).

We can quantify this relationship as the Agency Delta (AΔ):

$$A\Delta = \text{Perceived Agency (PA)} - \text{Agentic Control (AC)} = PA - AC$$

This formula turns qualitative indicators, survey data, workflow assessments, or organizational goals into a quantifiable metric: an indicator of system health as it pertains to a specific goal, function, or workflow. PA and AC scores must be standardized and collected with like-for-like methods, whether survey data, performance data, or otherwise. The objective is to hover around zero, where perceived control matches objective capability within the system. The more negative the delta, the more disempowered and potentially coercive the system; the more positive the delta, the more manipulative or deceptive the system may be.
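As a minimal sketch of the standardization step, the Python below assumes PA and AC are both collected on the same hypothetical 1 to 7 Likert scale (for instance, paired survey items per employee for one workflow). It rescales each score to 0 to 1 against the scale bounds and averages the per-respondent difference. The scale bounds, the function names, and the choice of min-max rescaling are illustrative assumptions, not part of the framework as stated.

```python
from statistics import mean

# Hypothetical survey scale (assumption): PA and AC items both use 1-7 Likert.
SCALE_MIN = 1
SCALE_MAX = 7


def rescale(score: float, lo: float = SCALE_MIN, hi: float = SCALE_MAX) -> float:
    """Min-max rescale a raw score onto 0..1 so PA and AC share one unit."""
    if not lo <= score <= hi:
        raise ValueError(f"score {score} outside scale [{lo}, {hi}]")
    return (score - lo) / (hi - lo)


def agency_delta(pa_scores: list[float], ac_scores: list[float]) -> float:
    """Mean Agency Delta (PA - AC) across paired, like-for-like measurements.

    Positive result: perceived agency exceeds actual control.
    Negative result: actual control exceeds perceived agency.
    """
    if len(pa_scores) != len(ac_scores) or not pa_scores:
        raise ValueError("PA and AC need the same, non-zero number of paired scores")
    deltas = [rescale(pa) - rescale(ac) for pa, ac in zip(pa_scores, ac_scores)]
    return mean(deltas)


# Illustrative, made-up paired scores for four respondents on one workflow.
perceived = [6, 7, 5, 6]
actual = [3, 4, 3, 2]
print(round(agency_delta(perceived, actual), 3))  # 0.5
```

Rescaling against the fixed scale bounds, rather than against the sample itself, keeps a delta of zero meaningful: it means respondents rated their perceived control and their actual control at the same point on the shared scale.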

Near Zero Delta (Reality): Indicates potential ethical alignment. Perception matches reality, fostering trust and accountability.

Large Positive Delta (Illusion of Control): Reveals manipulation. Employees feel empowered but have little real control, breeding cynicism and burnout.

Large Negative Delta (Disempowerment Gap): Indicates inefficiency and moral failure. People possess actual agency but feel powerless due to confusion or poor feedback, leading to helplessness and underutilized potential.
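The three bands above can be sketched as a simple classifier. The framework does not specify numeric cut-offs, so the 0.15 tolerance below is a hypothetical placeholder. It applies to a delta that has already been standardized to the range -1 to 1, as happens when PA and AC are each rescaled to 0 to 1. Any organization using this would need to calibrate its own tolerance.

```python
from enum import Enum


class AgencyBand(Enum):
    """Interpretation bands for the Agency Delta (PA - AC)."""
    NEAR_ZERO = "reality: perception matches actual control"
    ILLUSION_OF_CONTROL = "large positive delta: possible manipulation"
    DISEMPOWERMENT_GAP = "large negative delta: possible disempowerment"


# Hypothetical tolerance (assumption); the framework does not define a cut-off.
DEFAULT_TOLERANCE = 0.15


def classify_delta(delta: float, tolerance: float = DEFAULT_TOLERANCE) -> AgencyBand:
    """Map a standardized Agency Delta in [-1, 1] onto one of the three bands."""
    if not -1.0 <= delta <= 1.0:
        raise ValueError("expected a delta standardized to the range [-1, 1]")
    if delta > tolerance:
        return AgencyBand.ILLUSION_OF_CONTROL
    if delta < -tolerance:
        return AgencyBand.DISEMPOWERMENT_GAP
    return AgencyBand.NEAR_ZERO


for d in (0.05, 0.5, -0.4):
    print(f"{d:+.2f} -> {classify_delta(d).name}")
```

The middle band is a tolerance rather than exact zero, which reflects the idea of "hovering around" the zero line. How wide that band should be is a judgment call.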

Now, let's consider incarceration as a counterexample, where an individual's agency is overtly limited by force. While this scenario doesn't align with the subtle nature of most manipulative techniques, it highlights why a multi-faceted definition of agency and supporting analytic structures are imperative.

Conversely, one might argue that diminishing the perception of agency to enhance actual control can be ethical and non-manipulative.

A graphic showing the Agency Delta (AΔ) calculation for dual-factor agency.

Implications for Ethical AI Implementation

I would like to turn this definition into a thought experiment about ethical AI implementation strategies. In a previous work, we discussed how the artificial intelligence race resembles Promethean fire and requires thoughtful, unified ethical guidelines. Here, I hope to continue that discussion and propose that one mark of an ethical system, when integrating artificial intelligence into personal, business, and governmental life, is that it passes the following test.

This is critical to avoid potentially disastrous outcomes such as cognitive and agency decline in humans within the system.

Ask these three questions:

Does the integration of AI offload, circumvent, augment, or manipulate human agency within the system?

Is control being distributed so that humans can maximize their agency, or is it concentrating most of the agency in the AI? Does the AI hold more tangible control than the humans?

Does the proposed implementation of AI increase the perception of human agency without increasing their level of control?
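The third question maps most directly onto the Agency Delta. As a hedged sketch, assume PA and AC have been measured on the same standardized 0 to 1 scale both before and after an AI rollout. The Python below flags an implementation that raises perceived agency without raising actual control. The `Snapshot` type, the `min_change` threshold, and the function name are hypothetical illustrations, not part of the framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    """Standardized (0-1) measurements of one workflow at a point in time."""
    perceived_agency: float  # PA
    agentic_control: float   # AC

    @property
    def delta(self) -> float:
        """Agency Delta: PA - AC."""
        return self.perceived_agency - self.agentic_control


def inflates_perception_only(before: Snapshot, after: Snapshot,
                             min_change: float = 0.05) -> bool:
    """Return True if PA rose by at least min_change while AC did not.

    min_change is a hypothetical noise threshold (assumption).
    """
    pa_gain = after.perceived_agency - before.perceived_agency
    ac_gain = after.agentic_control - before.agentic_control
    return pa_gain >= min_change and ac_gain < min_change


baseline = Snapshot(perceived_agency=0.55, agentic_control=0.50)
rollout = Snapshot(perceived_agency=0.80, agentic_control=0.45)
print(inflates_perception_only(baseline, rollout))  # True
print(round(rollout.delta - baseline.delta, 2))     # 0.3
```

A positive delta that widens after rollout is the illusion-of-control pattern: people feel more empowered while their real control stays flat or shrinks. A rollout that raises both PA and AC together would not be flagged.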

All three questions are vitally important, though they may not have clear answers. By stopping to think deeply, we can begin to identify why certain approaches are more likely to fail and lead to the undesirable outcomes we seek to avoid.

Are we relinquishing too much of our genuine control to synthetic intelligence, mistakenly entrusting systems whose agency lies beyond authentic human oversight?

Could our interactions with AI unknowingly be fostering self-deception, heightening our perceived autonomy while gradually eroding objective agency?

Ethical AI implementation must intentionally protect and amplify dual-factor human agency. This means balancing the perceived experience of control with tangible, meaningful authority, ensuring trust remains robust between humans and synthetic agents. All organizations inherently function as complex adaptive systems.

Large language models and neural networks, inspired by biomimicry and human language, operate as intricate adaptive systems themselves.

Meanwhile, the human psyche is uniquely complex, embodied, enacted, and emergent from lived experience.

Therefore, effective AI implementation must embrace complexity rather than oversimplify it. It must anchor itself firmly in human experience, always aligning technological advancement with authentic human agency.

This holistic understanding allows us to confidently provide AI implementation services across diverse scenarios, from standard business processes and cutting-edge applications to theoretical explorations and niche cases. Working with people means navigating the inherent intricacies of agency. The most successful organizations intentionally craft environments that maximize dual-factor agency, empowering both individuals and the collective through a thoughtful distribution of agency, trust, and policy.

Next time, we'll delve into the second principle of the ATP framework: the principle of Trust within organizational systems, unpacking its essential role in ethical AI integration and the creation of genuinely collaborative, non-manipulative environments.

Until then, we warmly invite you to reach out and schedule a complimentary consultation. Whether your needs involve AI integration, cultivating a thriving organizational culture, or implementing meaningful behavioral interventions, we're here to support you in building dynamic systems that truly amplify human agency, aligning perceived autonomy with real, actionable control.

Sources:

Agency, AI, & Complex Systems

Moloi, T., & Marwala, T. (2020). The agency theory. In Artificial Intelligence in Economics and Finance Theories (pp. 95–102). Springer. https://doi.org/10.1007/978-3-030-42962-1_11

This chapter examines how AI enhances and complicates classical agency frameworks by improving visibility and shifting evaluation of agent behavior within principal-agent relationships.

Dung, L. (2024). Understanding artificial agency. The Philosophical Quarterly. https://philarchive.org/rec/DUNUAA

Develops a multidimensional model of AI agency—including autonomy, goal-directedness, and environmental influence—providing a philosophical foundation for understanding when AI acts as an ethical agent.

Yolles, M. (2019). The complexity continuum, part 2: Modelling harmony. Kybernetes, 48, 1626–1652. https://doi.org/10.1108/K-06-2018-0338

Integrates agency theory with complex adaptive systems, offering frameworks to analyze how agency emerges across nested systems, supporting this article’s multi-layer agency model.

Human–AI Agency Perception

Legaspi, R., Xu, W., Konishi, T., Wada, S., Kobayashi, N., Naruse, Y., & Ishikawa, Y. (2024). The sense of agency in human–AI interactions. Knowledge-Based Systems, 286, 111298. https://www.sciencedirect.com/science/article/pii/S0950705123010468

Investigates how interactions with AI affect individuals' perceived control and autonomy, aligning with this article's concern about perceived versus actual agency in AI systems.

Loeff, A., Bassi, I., Kapila, S., & Gamper, J. (2019). AI ethics for systemic issues: A structural approach. arXiv. https://arxiv.org/abs/1911.03216

Critically examines ethics frameworks that focus on individual agency while neglecting systemic dynamics, urging a complex adaptive systems lens to mitigate manipulation risks.

Ravven, H. M., & Clark, A. (2008). The self beyond itself: Further reflection on Spinoza's systems theory of ethics. https://www.iiis.org/Ravven.pdf

Argues that moral agency emerges from interlinked, nested systems.

Kosmyna, N., Hauptmann, E., Yuan, Y. T., Situ, J., Liao, X.-H., Beresnitzky, A. V., Braunstein, I., & Maes, P. (2025). Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task. arXiv. https://arxiv.org/abs/2506.08872

Reports the effects on memory, perceived ownership, and brain activity in LLM users, Search Engine users, and Brain-only users when writing an essay.

Psychological & Neurological Perspectives on Agency

Balconi, M. (2010). The sense of agency in psychology and neuropsychology. In Neuroscience of consciousness and self-awareness (pp. 3–22). https://doi.org/10.1007/978-88-470-1587-6_1

Bridges psychological and neurological understandings of agency, exploring the subjective experience of control and supporting the dual-factor agency definition proposed here.

Tomasello, M. (2022). The evolution of agency: Behavioral organization from lizards to humans. MIT Press. https://www.penguinrandomhouse.com/books/710650/the-evolution-of-agency-by-michael-tomasello/

Explores how agency arises through social and developmental processes, highlighting emergent, nested structures of agency across individual and group systems.

Segundo-Ortín, M. (2020). Agency from a radical embodied standpoint: An ecological-enactive proposal. Frontiers in Psychology, 11. https://www.frontiersin.org/journals/psychology/articles/10.3389/fpsyg.2020.01319/full

Presents an embodied, ecological view of agency where subjective experience and environmental interaction co-emerge.